Text Generation with Custom HuggingFace Model

In this demo we will:

- Launch a pretrained custom text generation HuggingFace model in a Seldon Deployment
- Send a text input request to get a generated text prediction

The custom HuggingFace text generation model is based on the TinyStories-1M model from the HuggingFace Hub.

Create a V1 Seldon Deployment

On the

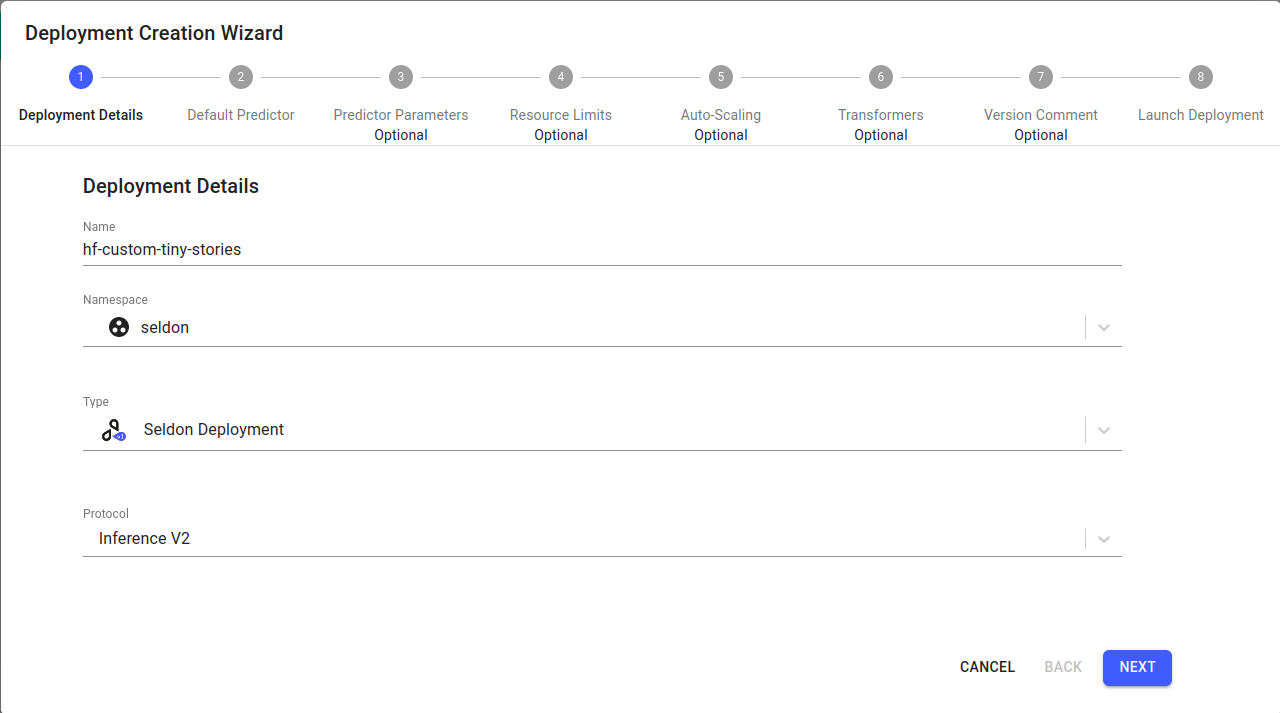

Overviewpage, click onCreate new deployment.Enter the deployment details as follows, then click

Next:Parameter

Value

Name

hf-custom-tiny-stories

Namespace

seldon [1]

Type

Seldon Deployment

Protocol

Inference V2

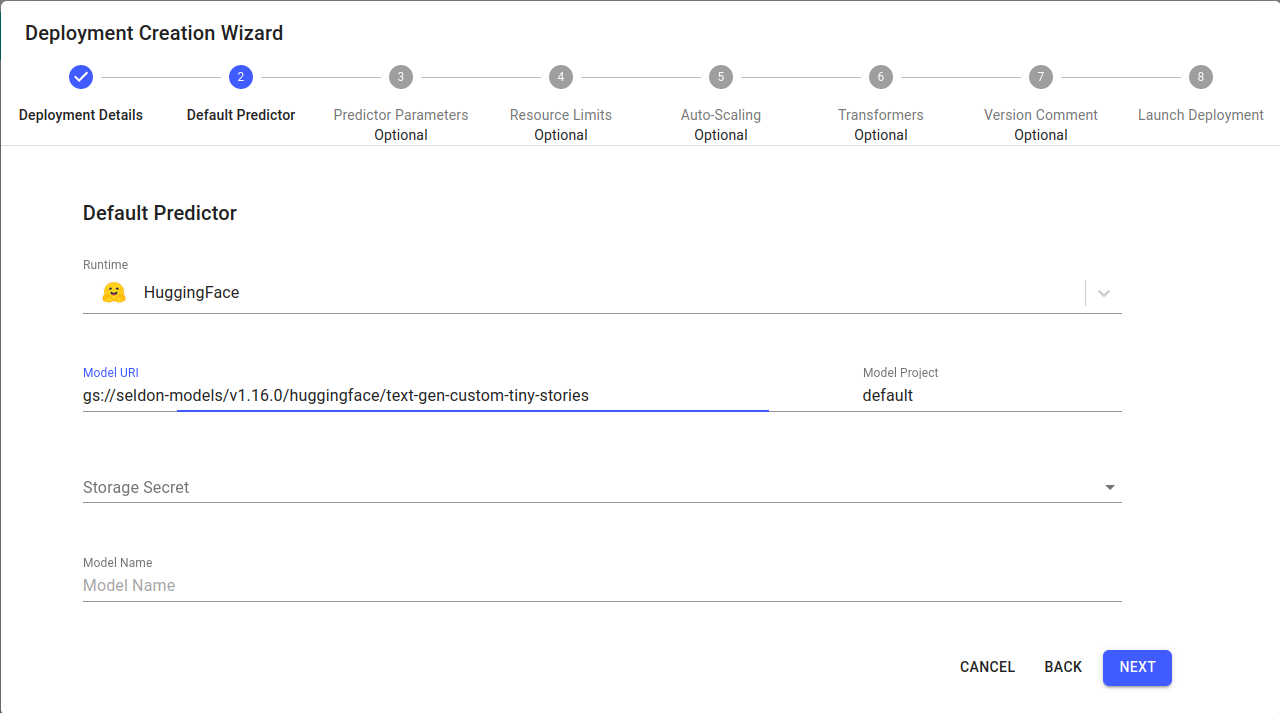

Configure the default predictor as follows, then click Next:

| Parameter | Value |
| --- | --- |
| Runtime | HuggingFace |
| Model Project | default |
| Model URI | gs://seldon-models/v1.18.1/huggingface/text-gen-custom-tiny-stories |
| Storage Secret | (leave blank/none) [2] |
| Model Name | (leave blank) |

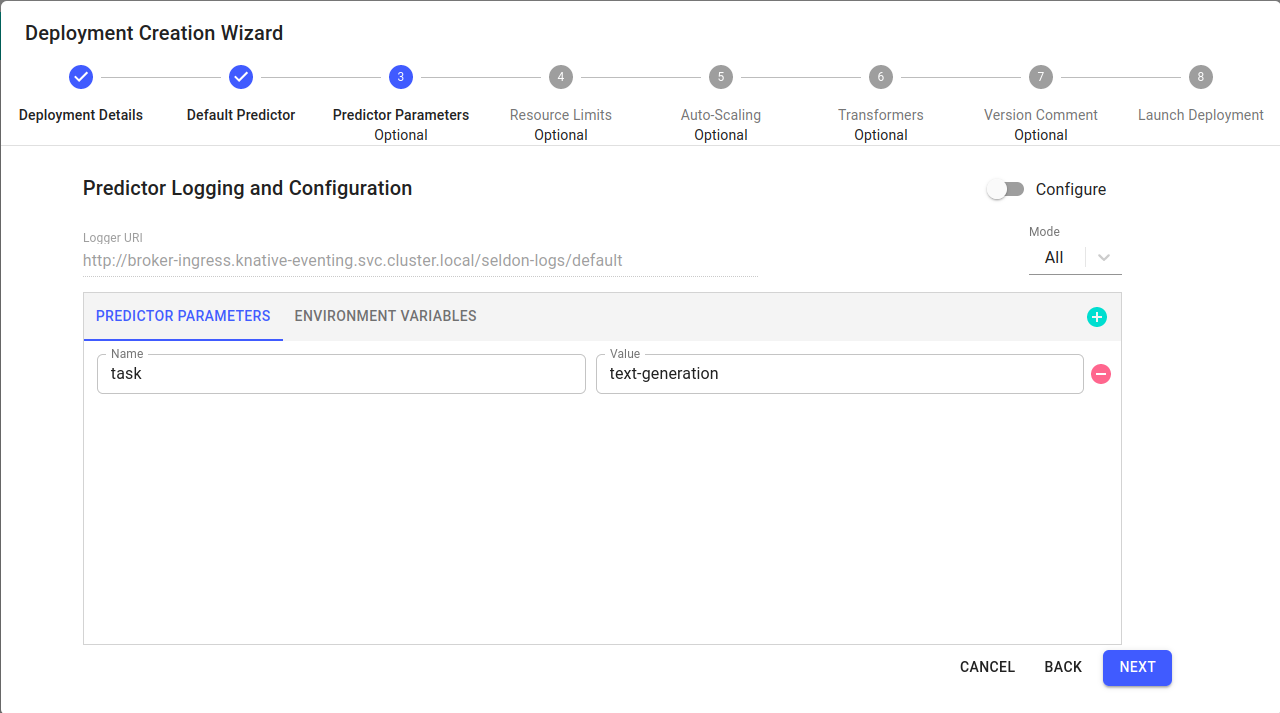

Fill in the predictor parameters as follows:

| Predictor Parameter | Value |
| --- | --- |
| task | text-generation |

Click Next for the remaining steps [3], then click Launch.

1. The seldon and seldon-gitops namespaces are installed by default but may not be available in every environment. Please select the namespace that best suits your environment.

2. A secret may be required for private buckets.

3. Additional steps may be required for your specific model.
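If you prefer to manage resources declaratively, the wizard settings above can be approximated as a Seldon Core v1 `SeldonDeployment` manifest. The following Python sketch builds one as a dictionary and prints it as JSON, which `kubectl apply -f -` accepts as well as YAML. The field mapping is an assumption based on the Seldon Core v1 prepackaged HuggingFace server (`HUGGINGFACE_SERVER`); the graph node name `text-generator` and `replicas: 1` are hypothetical choices, and the Model Project setting, which is specific to the platform UI, is not represented. Compare the output with the resource the wizard actually creates before relying on it.

```python
import json


def tiny_stories_deployment(namespace="seldon"):
    """Sketch of a SeldonDeployment resembling the wizard settings (assumed mapping)."""
    return {
        "apiVersion": "machinelearning.seldon.io/v1",
        "kind": "SeldonDeployment",
        "metadata": {"name": "hf-custom-tiny-stories", "namespace": namespace},
        "spec": {
            "protocol": "v2",  # corresponds to "Inference V2" in the wizard
            "predictors": [
                {
                    "name": "default",
                    "replicas": 1,  # hypothetical: wizard default not confirmed
                    "graph": {
                        "name": "text-generator",  # hypothetical graph node name
                        "implementation": "HUGGINGFACE_SERVER",
                        "modelUri": "gs://seldon-models/v1.18.1/huggingface/text-gen-custom-tiny-stories",
                        # Predictor parameter from the table above
                        "parameters": [
                            {"name": "task", "type": "STRING", "value": "text-generation"},
                        ],
                    },
                }
            ],
        },
    }


# Print the manifest as JSON so it can be piped into `kubectl apply -f -`.
print(json.dumps(tiny_stories_deployment(), indent=2))
```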

Get Prediction

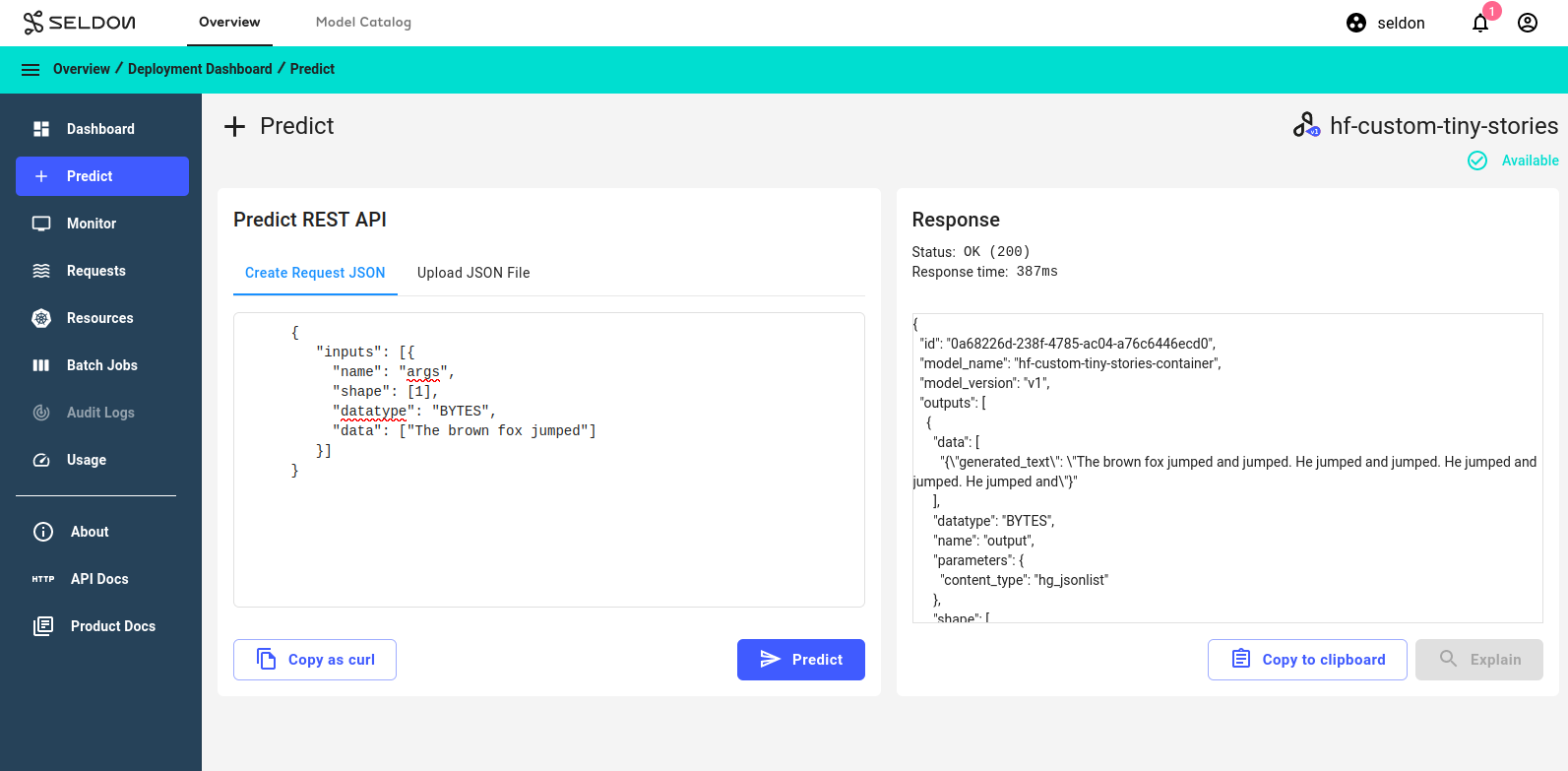

Click on the hf-custom-tiny-stories deployment created in the previous section to enter the deployment dashboard.

Inside the deployment dashboard, click on the Predict button.

On the Predict page, enter the following text:

```json
{
  "inputs": [
    {
      "name": "args",
      "shape": [1],
      "datatype": "BYTES",
      "data": ["this is a test"]
    }
  ]
}
```

Click the Predict button.
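The same request can also be sent programmatically. The sketch below uses only the Python standard library to build the V2 payload from the Predict page, POST it, and collect the raw `data` of each output tensor. The URL layout `/seldon/<namespace>/<deployment>/v2/models/<model>/infer` is an assumption based on Seldon Core v1 ingress conventions; the host, the model name, and the optional bearer token are placeholders, so take the exact endpoint and any credentials from your own deployment dashboard. The format of the generated text inside `data` is not assumed here.

```python
import json
import urllib.request


def build_infer_request(texts):
    """Build a V2 inference request body like the one on the Predict page."""
    return {
        "inputs": [
            {
                "name": "args",          # input name from the Predict page example
                "shape": [len(texts)],   # one element per prompt
                "datatype": "BYTES",     # text is sent as BYTES in the V2 protocol
                "data": list(texts),
            }
        ]
    }


def infer_url(host, namespace, deployment, model):
    """Assumed Seldon Core v1 ingress path; confirm it in your deployment dashboard."""
    return f"{host.rstrip('/')}/seldon/{namespace}/{deployment}/v2/models/{model}/infer"


def predict(url, texts, token=None, timeout=60):
    """POST the request and return the decoded JSON response."""
    headers = {"Content-Type": "application/json"}
    if token:  # only needed if your ingress requires authentication
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps(build_infer_request(texts)).encode("utf-8")
    req = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def output_data(response):
    """Map each output tensor's name to its raw `data` list."""
    return {out["name"]: out["data"] for out in response.get("outputs", [])}


# Example usage (placeholders, not executed here):
# url = infer_url("https://<ingress-host>", "seldon", "hf-custom-tiny-stories", "<model-name>")
# print(output_data(predict(url, ["this is a test"])))
```

Keeping payload construction separate from the HTTP call makes it easy to check the request body against the JSON above before pointing it at a live endpoint.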

Congratulations, you’ve successfully sent a prediction request using a custom HuggingFace model! 🥳

Next Steps

Why not try our other demos? Or perhaps try running a larger-scale model? You can find one at gs://seldon-models/v1.18.1/huggingface/text-gen-custom-gpt2. However, it may need more memory than the default allocation, so you may need to request more!
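If you manage the deployment as a manifest rather than through the UI, one way to switch to the GPT-2 model and give it more memory is to patch the `SeldonDeployment` dictionary. The sketch below assumes the Seldon Core v1 convention that a `componentSpecs` container whose name matches the graph node overrides the prepackaged server's container settings. The `2Gi` figure is purely illustrative, setting the limit equal to the request is a design choice rather than a requirement, and the function replaces any `componentSpecs` already present on that predictor.

```python
import copy


def with_larger_model(sdep, model_uri, memory="2Gi", predictor_index=0):
    """Return a copy of a SeldonDeployment dict pointing at a new model with more memory.

    Assumes the Seldon Core v1 convention that a componentSpecs container named
    after the graph node overrides the prepackaged server container.
    """
    sdep = copy.deepcopy(sdep)  # leave the caller's manifest untouched
    predictor = sdep["spec"]["predictors"][predictor_index]
    predictor["graph"]["modelUri"] = model_uri
    container = {
        "name": predictor["graph"]["name"],  # must match the graph node name
        "resources": {
            "requests": {"memory": memory},
            "limits": {"memory": memory},  # illustrative: limit equal to request
        },
    }
    # Note: this replaces any componentSpecs already present on the predictor.
    predictor["componentSpecs"] = [{"spec": {"containers": [container]}}]
    return sdep
```

For example, `with_larger_model(manifest, "gs://seldon-models/v1.18.1/huggingface/text-gen-custom-gpt2")` returns a patched copy of `manifest`, where `manifest` is a hypothetical dictionary holding your existing deployment.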